Azure Data Factory: Download File from HTTP API to Data Lake in 10 minutes

- Roman Guoussev-Donskoi

- Oct 12, 2021

Updated: Oct 27, 2021

Azure Data Factory (ADF) has quickly outgrown its initial use case of "moving data between data stores". As ADF has matured, it has become a data integration hub in Azure cloud architectures.

This post demonstrates how easy it is to create an ADF pipeline that authenticates to an external HTTP API and downloads a file from that API server to Azure Data Lake Storage Gen2.

I have been using ADF for some time, but I by no means qualify as an ADF expert. The purpose of this post is to show how easy it is, even for an "occasional" Data Factory user, to pick up and leverage Data Factory capabilities beyond the traditional "database, storage account, etc." copy activities. Many thanks to David Ma from Microsoft for guiding us on this!

Prerequisites: this post assumes you have already created an Azure Data Factory instance and an Azure Data Lake Storage Gen2 account.

Let's get to it. :)

Open ADF Studio

Select the "Author" blade and create a new pipeline

Name the pipeline

Add a Web activity to the pipeline (drag it onto the canvas)

Supply the HTTP API URL. In our case, the username and password for authentication go in the POST request body (there are many other ways to configure authentication in ADF; I will try to cover them in a separate post).
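If you prefer to see what ADF Studio builds behind the designer, here is a minimal Python sketch that assembles a Web activity definition of this shape as a dictionary. The activity name `GetToken` matches the one referenced later in this post; the endpoint URL and the `username`/`password` body fields are hypothetical placeholders for whatever your API expects, and in a real pipeline the password would normally come from Azure Key Vault rather than being embedded in the definition.

```python
import json


def build_web_activity(name: str, url: str, username: str, password: str) -> dict:
    """Return an ADF Web activity definition that POSTs credentials in the body.

    The body field names ("username", "password") are assumptions about the
    target API; adjust them to match your API's authentication contract.
    """
    return {
        "name": name,
        "type": "WebActivity",
        "typeProperties": {
            "url": url,
            "method": "POST",
            "headers": {"Content-Type": "application/json"},
            # Credentials travel in the POST request body, as in this post.
            "body": {"username": username, "password": password},
        },
    }


if __name__ == "__main__":
    activity = build_web_activity(
        "GetToken",
        "https://api.example.com/auth",  # hypothetical auth endpoint
        "demo-user",
        "demo-password",  # placeholder only; prefer Key Vault in real pipelines
    )
    print(json.dumps(activity, indent=2))
```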

Click "Debug" to test. Note that in our case the response JSON contains a "ticket" element holding the authentication token, which we will use in the subsequent file download request.
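To make the link between the debug output and later steps concrete, the sketch below parses a response of this shape and builds the ADF expression that a downstream activity can use to read the same field. Only the `ticket` element name comes from this post; the sample response body (including `expires_in`) is a hypothetical example.

```python
import json


def extract_ticket(response_text: str) -> str:
    """Return the "ticket" element from the authentication response JSON."""
    payload = json.loads(response_text)
    return payload["ticket"]


def output_expression(activity_name: str, field: str) -> str:
    """Build the ADF expression that reads `field` from an activity's output."""
    return f"@activity('{activity_name}').output.{field}"


sample_response = '{"ticket": "abc123", "expires_in": 3600}'  # hypothetical response
print(extract_ticket(sample_response))          # abc123
print(output_expression("GetToken", "ticket"))  # @activity('GetToken').output.ticket
```

In the pipeline itself ADF evaluates the expression at run time, so the Python parsing only mirrors what the expression does.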

Now let's create a linked service to use for the file download.

Select "HTTP" as the linked service type.

Supply the HTTP API endpoint URL and select an integration runtime (IR) that can access this endpoint.

For the authentication type, select "Anonymous", because we will configure authentication in a custom HTTP header in later steps.
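As a rough illustration, an HTTP linked service configured this way can be sketched as the following dictionary. The linked service name and base URL are hypothetical; `AutoResolveIntegrationRuntime` is the default Azure IR, and you would substitute the name of a self-hosted IR if only that runtime can reach your API.

```python
import json


def build_http_linked_service(name: str, base_url: str, runtime_name: str) -> dict:
    """Return an ADF HTTP linked service that uses anonymous authentication.

    Authentication is handled later via a custom request header, so the
    linked service itself carries no credentials.
    """
    return {
        "name": name,
        "properties": {
            "type": "HttpServer",
            "typeProperties": {
                "url": base_url,
                "authenticationType": "Anonymous",
            },
            # Route requests through an integration runtime that can reach the API.
            "connectVia": {
                "referenceName": runtime_name,
                "type": "IntegrationRuntimeReference",
            },
        },
    }


if __name__ == "__main__":
    linked_service = build_http_linked_service(
        "HttpApiLinkedService",      # hypothetical name
        "https://api.example.com/",  # hypothetical base URL
        "AutoResolveIntegrationRuntime",
    )
    print(json.dumps(linked_service, indent=2))
```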

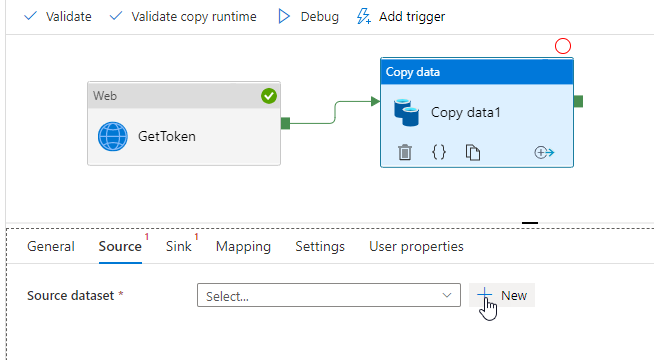

Now let's go back to the "Author" blade and add a "Copy Data" activity to the pipeline. Drag it onto the canvas and connect it so that it runs after our Web activity.
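Connecting the two activities on the canvas is recorded as a dependency on the Copy activity. The minimal sketch below shows that chaining; the activity and dataset names are hypothetical, and the source and sink are deliberately left as bare `BinarySource`/`BinarySink` types without store settings.

```python
import json


def build_copy_activity(name: str, upstream: str, source_ds: str, sink_ds: str) -> dict:
    """Return a Copy activity that runs only after `upstream` succeeds."""
    return {
        "name": name,
        "type": "Copy",
        # The "on success" connector drawn in the designer becomes this dependency.
        "dependsOn": [
            {"activity": upstream, "dependencyConditions": ["Succeeded"]}
        ],
        "inputs": [{"referenceName": source_ds, "type": "DatasetReference"}],
        "outputs": [{"referenceName": sink_ds, "type": "DatasetReference"}],
        "typeProperties": {
            "source": {"type": "BinarySource"},
            "sink": {"type": "BinarySink"},
        },
    }


if __name__ == "__main__":
    copy = build_copy_activity("DownloadFile", "GetToken", "HttpBinarySource", "LakeBinarySink")
    print(json.dumps(copy, indent=2))
```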

Create a new source dataset for the "Copy Data" activity.

Select the "HTTP" type and the Binary format

Select the HTTP linked service we created previously and specify the relative URL of the file to download.
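A Binary dataset over the HTTP linked service combines the base URL from the linked service with the relative URL of the file. The sketch below assumes hypothetical dataset, linked service, and path names.

```python
import json


def build_http_binary_dataset(name: str, linked_service: str, relative_url: str) -> dict:
    """Return a Binary dataset that reads one file from the HTTP linked service.

    At run time ADF resolves the file URL as the linked service base URL
    plus `relative_url`.
    """
    return {
        "name": name,
        "properties": {
            "type": "Binary",
            "linkedServiceName": {
                "referenceName": linked_service,
                "type": "LinkedServiceReference",
            },
            "typeProperties": {
                "location": {
                    "type": "HttpServerLocation",
                    "relativeUrl": relative_url,
                }
            },
        },
    }


if __name__ == "__main__":
    dataset = build_http_binary_dataset(
        "HttpBinarySource",          # hypothetical dataset name
        "HttpApiLinkedService",      # hypothetical linked service name
        "files/download?id=12345",   # hypothetical relative URL
    )
    print(json.dumps(dataset, indent=2))
```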

Configure the authentication header using the token received in the response of the previous Web activity in the pipeline.

In the screenshot below, simply selecting "GetToken activity output" (the output of the previous activity) adds it to the HTTP header as dynamic content.

All that remains is to specify that the token is contained in the "ticket" element of the JSON response (as we noted when testing the Web activity earlier).
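In JSON terms, the header with dynamic content ends up in the Copy activity source's HTTP read settings. This is a sketch under assumptions: the header name `X-Auth-Ticket` is hypothetical (use whatever header your API expects), and `@{...}` is ADF string interpolation, which the designer stores as a value of type `Expression`.

```python
import json


def build_http_source(header_name: str, token_activity: str, token_field: str = "ticket") -> dict:
    """Return Copy source settings that send the token in a custom HTTP header."""
    # "@{...}" is evaluated by ADF at run time; double braces escape the f-string.
    header_value = f"@{{activity('{token_activity}').output.{token_field}}}"
    return {
        "type": "BinarySource",
        "storeSettings": {
            "type": "HttpReadSettings",
            "requestMethod": "GET",
            "additionalHeaders": {
                "value": f"{header_name}: {header_value}",
                "type": "Expression",
            },
        },
    }


if __name__ == "__main__":
    source = build_http_source("X-Auth-Ticket", "GetToken")  # hypothetical header name
    print(json.dumps(source, indent=2))
```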

Now let's configure the sink for the Copy Data activity.

Create a new Azure Data Lake Storage Gen2 dataset

Select the Binary format

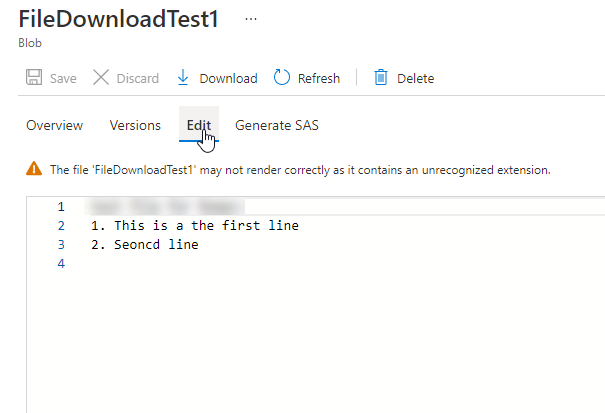

Select the Azure Data Lake Storage Gen2 linked service created earlier and specify the folder and the name of the file that will hold the downloaded data.
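A sketch of the matching sink side follows: a Binary dataset pointing at a single file in ADLS Gen2, plus the Copy activity sink settings that write the raw bytes there. The linked service, file system (container), folder, and file names are all hypothetical.

```python
import json


def build_adls_binary_dataset(name: str, linked_service: str, file_system: str,
                              folder: str, file_name: str) -> dict:
    """Return a Binary dataset pointing at one file in ADLS Gen2."""
    return {
        "name": name,
        "properties": {
            "type": "Binary",
            "linkedServiceName": {
                "referenceName": linked_service,
                "type": "LinkedServiceReference",
            },
            "typeProperties": {
                "location": {
                    "type": "AzureBlobFSLocation",
                    "fileSystem": file_system,
                    "folderPath": folder,
                    "fileName": file_name,
                }
            },
        },
    }


def build_binary_sink() -> dict:
    """Return Copy activity sink settings that write raw bytes to ADLS Gen2."""
    return {
        "type": "BinarySink",
        "storeSettings": {"type": "AzureBlobFSWriteSettings"},
    }


if __name__ == "__main__":
    dataset = build_adls_binary_dataset(
        "LakeBinarySink", "AdlsLinkedService", "raw", "downloads/http-api", "report.bin"
    )
    print(json.dumps(dataset, indent=2))
    print(json.dumps(build_binary_sink(), indent=2))
```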

Click "Debug" and verify that both activities completed successfully.

Use the icons next to each activity name to view the input, output, and execution details of that activity.

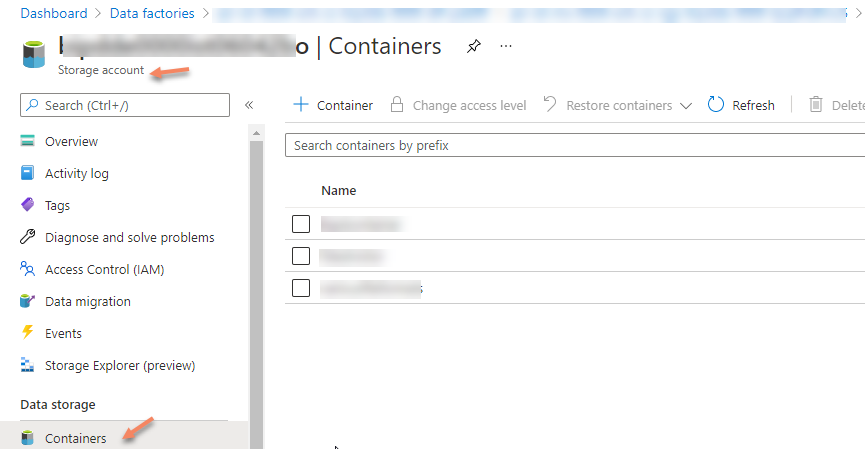

Now go to the Azure Data Lake Storage account to verify that the file has been downloaded successfully.

That is all.

I hope you find this useful and enjoy working with Azure Data Factory :)
